TL;DR

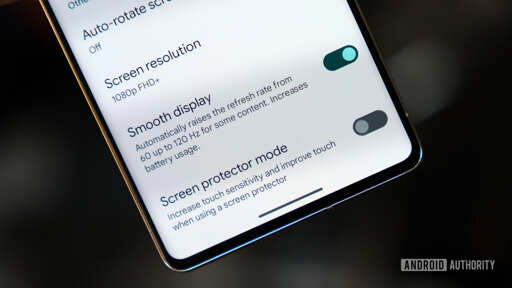

- The adaptive refresh rate (ARR) feature in Android 15 enables the display refresh rate to adapt to the frame rate of content.

- The ARR feature reduces power consumption and jank as it lets devices operate at lower refresh rates without the need for mode switching.

- While previous versions of Android supported multiple refresh rates, they did so by switching between discrete display modes.

Android Authority's TL;DR (conveniently) doesn’t mention the actual downside to this update. But it’s fine imo, since this was actually a pretty insightful read.

My TL;DR:

This is not traditional VRR as we usually think of it. VRR as we usually think of it changes the monitor's refresh timing to better suit the frame pacing of the frames it receives; that is not what's happening here. Traditional VRR is how things like FreeSync work: it takes your display's refresh rate, say 60Hz, and slows it down to better match the frame pacing of the content, say 48fps. The monitor doesn’t physically change states or anything, it just allows flexible updating to match the frame pacing.

You don't get this with this adaptive refresh rate method.

Here you are effectively getting a no-op on every refresh cycle the content doesn’t need. It’s still good, but not as good as what most people think of as VRR (FreeSync/VESA Adaptive-Sync, G-Sync, etc.). You are limited to the steps your display can output. For this to be useful you need a high refresh rate display like 120Hz, because each application's frames need to align with a refresh cycle.
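To make the "steps" idea concrete, here's a rough sketch in Python. It assumes the only clean frame rates are the ones where each frame lasts a whole number of refresh cycles, which is my reading of the article and not something taken from Android's code. The function name and the `min_hz` floor are made up for illustration; real panels have their own limits.

```python
def evenly_paced_rates(panel_hz: int, min_hz: int = 1) -> list[int]:
    """Frame rates where each frame lasts a whole number of refresh cycles.

    Assumption: a rate is "clean" only if panel_hz / rate is an integer.
    min_hz is a hypothetical floor, not a real panel spec.
    """
    return [
        panel_hz // n
        for n in range(1, panel_hz + 1)
        if panel_hz % n == 0 and panel_hz // n >= min_hz
    ]


print(evenly_paced_rates(120, min_hz=20))  # [120, 60, 40, 30, 24, 20]
print(evenly_paced_rates(90, min_hz=20))   # [90, 45, 30]
```

A 120Hz panel gets you the common content rates (60, 30, 24). A 90Hz panel doesn't have 24 or 60 in its list at all.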

E.g. say you have a 24fps video on a 120Hz display: the display won’t change its frame pacing, but rather each frame gets one refresh followed by 4 no-ops (24 * 5 = 120). Now assume you have a 90Hz display: 90 is not a whole multiple of 24 (90 / 24 = 3.75), so you have to either wait for sync or get tearing. The first one leads to judder (which can probably be mitigated using offset sync waits?), the second one is, well, tearing.
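Here's a small Python sketch of the "wait for sync" case, to show where the judder comes from. It's a simplified model I'm assuming for illustration: every frame is ready exactly on schedule and gets shown at the first refresh at or after that point. `hold_pattern` is a made-up name, not an Android API.

```python
import math
from fractions import Fraction


def hold_pattern(content_fps: int, panel_hz: int, frames: int = 8) -> list[int]:
    """Refresh cycles each frame stays on screen when frames wait for sync.

    Assumption: frame i is ready at i * (panel_hz / content_fps) cycles and
    is displayed at the first refresh boundary at or after that time.
    """
    cycles_per_frame = Fraction(panel_hz, content_fps)
    shown_at = [math.ceil(i * cycles_per_frame) for i in range(frames + 1)]
    # How long each frame is held = gap until the next frame is shown.
    return [later - earlier for earlier, later in zip(shown_at, shown_at[1:])]


print(hold_pattern(24, 120))  # [5, 5, 5, 5, 5, 5, 5, 5]
print(hold_pattern(24, 90))   # [4, 4, 4, 3, 4, 4, 4, 3]
```

On 120Hz every frame is held for 5 cycles (one refresh plus 4 no-ops). On 90Hz the hold time alternates between 4 and 3 cycles, and that uneven cadence is the judder.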